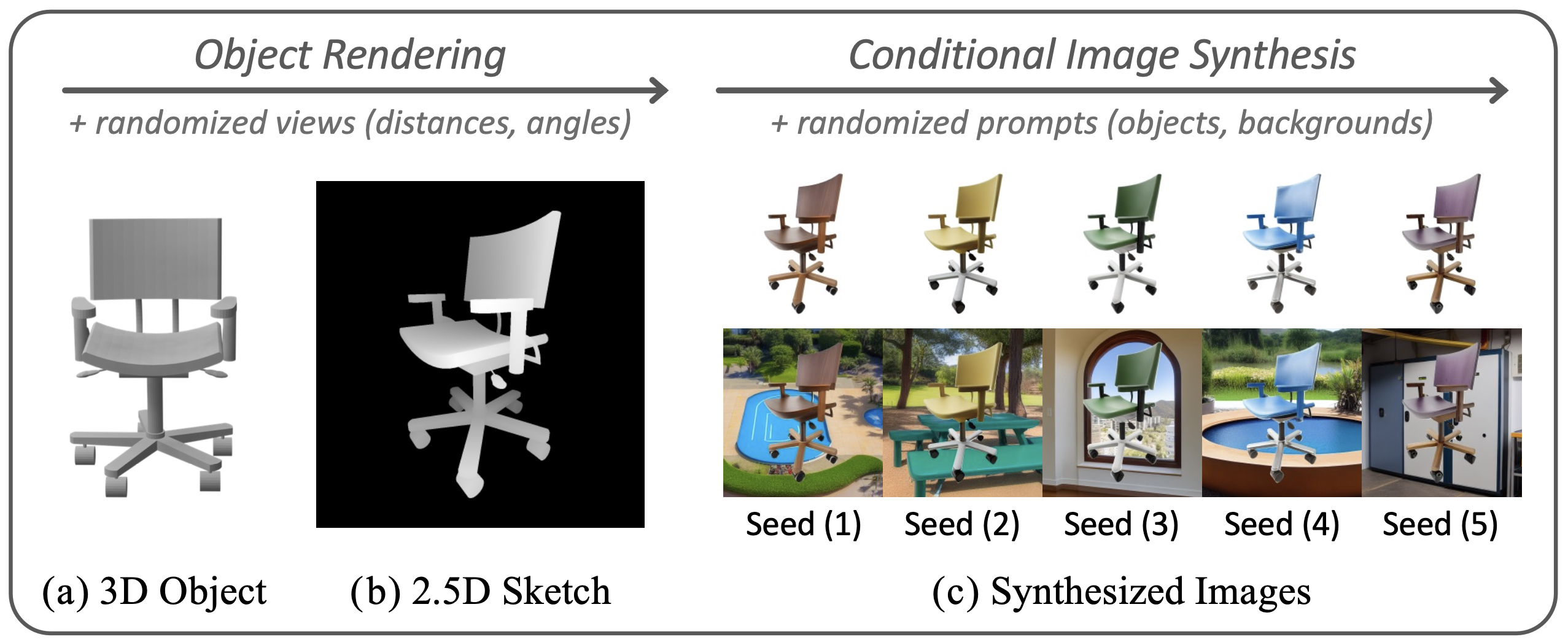

One of the biggest challenges in single-view 3D shape reconstruction in the wild is the scarcity of ⟨3D shape, 2D image⟩-paired data from real-world environments. Inspired by the remarkable success of domain randomization, we propose ObjectDR, which synthesizes such paired data by randomly simulating visual variations in object appearances and backgrounds.
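One simple way to picture the "random simulation of visual variations" is to sample appearance and background attributes independently and assemble them into the text prompt given to a generator. The sketch below does this. The vocabularies, the prompt template, and the `random_prompt` helper are hypothetical illustrations. They are not ObjectDR's actual prompt design.

```python
import random

# Hypothetical vocabularies of visual variations (illustrative only,
# not the word lists used by ObjectDR).
MATERIALS = ["wooden", "metallic", "plastic", "marble", "leather"]
COLORS = ["red", "navy blue", "matte black", "pastel green", "white"]
BACKGROUNDS = [
    "in a sunlit kitchen",
    "on a city street at night",
    "in a forest clearing",
    "in a cluttered garage",
]


def random_prompt(category: str, rng: random.Random) -> str:
    """Sample one randomized text prompt for a given object category."""
    material = rng.choice(MATERIALS)    # randomize object appearance
    color = rng.choice(COLORS)          # randomize object appearance
    background = rng.choice(BACKGROUNDS)  # randomize the surrounding scene
    return f"a photo of a {color} {material} {category} {background}"


rng = random.Random(0)  # fixed seed so the sampled prompts are reproducible
for _ in range(3):
    print(random_prompt("chair", rng))
```

Sampling each factor independently keeps the object category fixed while everything else varies. This mirrors the domain randomization idea of covering many visual domains instead of targeting one.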

Our data synthesis framework uses a conditional generative model (e.g., ControlNet) to generate images that conform to spatial conditions such as 2.5D sketches, which are obtained by rendering 3D shapes from object collections (e.g., Objaverse-XL). To simulate diverse variations while preserving the object silhouettes embedded in the spatial conditions, we also introduce a disentangled framework that leverages initial object guidance.
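2.5D sketches usually combine cues such as a silhouette, depth, and surface normals. The sketch below shows one common way to derive a silhouette and a normal map from a rendered depth map. It assumes an orthographic camera, unit pixel spacing, and a background marked by zero depth. The page does not say which 2.5D cues ObjectDR actually feeds to the conditional generator, and `sketch_from_depth` is a hypothetical helper.

```python
import numpy as np


def sketch_from_depth(depth: np.ndarray, background: float = 0.0):
    """Derive a silhouette mask and a surface-normal map from a depth map.

    Assumes an orthographic camera and unit pixel spacing; pixels whose depth
    equals `background` are treated as empty space. Normals right at the
    silhouette boundary are unreliable because the finite differences mix
    object and background depths.
    """
    mask = depth != background  # object silhouette
    # np.gradient returns derivatives along axis 0 (rows, y) then axis 1 (cols, x).
    dz_dy, dz_dx = np.gradient(depth.astype(np.float64))
    normals = np.stack([-dz_dx, -dz_dy, np.ones_like(depth, dtype=np.float64)], axis=-1)
    normals /= np.linalg.norm(normals, axis=-1, keepdims=True)  # unit-length normals
    normals[~mask] = 0.0  # no surface outside the object
    return mask, normals


# Toy example: a planar patch tilted along x, on an empty (zero-depth) background.
depth = np.zeros((8, 8))
_, xs = np.mgrid[2:6, 2:6]
depth[2:6, 2:6] = 2.0 + 0.5 * xs
mask, normals = sketch_from_depth(depth)
print(mask.sum(), normals[3, 3])
```

In a real pipeline, a renderer would usually output normals directly. Deriving them from depth is only meant to show how the cues relate to each other.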

After synthesizing a wide range of data, we pre-train a model on them so that it learns a domain-invariant geometry prior, i.e., one that stays consistent across diverse domains. We validate its effectiveness by showing that this pre-training substantially improves 3D shape reconstruction models on a real-world benchmark. In a scale-up evaluation, our pre-training achieves 23.6% better results than pre-training on high-quality computer graphics renderings.

To effectively handle the trade-off between fidelity (preserving object silhouettes) and diversity (simulating varied visual variations), we propose ObjectDRdis, which disentangles the randomization of object appearances from that of backgrounds. The framework randomizes object appearances and backgrounds separately and then integrates them. Within this disentangled framework, we leverage initial object guidance, which significantly improves fidelity at the cost of monotonous backgrounds. In parallel, we enhance background diversity by synthesizing random, authentic scenes that are not constrained by objects.
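The page does not specify how the separately randomized object and background are integrated. The sketch below assumes the simplest option: an alpha composite that uses the object's silhouette from the spatial condition as the mask. The `composite` function and the stand-in images are hypothetical. ObjectDR's actual integration step may differ, for example by using a generative model.

```python
import numpy as np


def composite(obj_rgb: np.ndarray, bg_rgb: np.ndarray, silhouette: np.ndarray) -> np.ndarray:
    """Paste a separately randomized object onto a separately randomized background.

    `silhouette` may be boolean or a soft alpha in [0, 1]; it is broadcast
    over the RGB channels.
    """
    alpha = silhouette.astype(np.float64)[..., None]  # shape (H, W, 1)
    return alpha * obj_rgb + (1.0 - alpha) * bg_rgb


rng = np.random.default_rng(0)
H, W = 4, 4
obj = np.ones((H, W, 3))      # stand-in for an object image generated with object guidance
bg = rng.random((H, W, 3))    # stand-in for a freely generated background scene
sil = np.zeros((H, W), dtype=bool)
sil[1:3, 1:3] = True          # object silhouette taken from the spatial condition
img = composite(obj, bg, sil)
```

Under this assumption, the object pixels keep the silhouette exactly. This is the fidelity side of the trade-off. The background pixels come entirely from an unconstrained scene, which is the diversity side.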

For better visualization, we draw bounding boxes in red.

ObjectDR (ObjectDR.official@gmail.com)

@article{cho2024objectdr,
  title={Object-Centric Domain Randomization for 3D Shape Reconstruction in the Wild},
  author={Junhyeong Cho and Kim Youwang and Hunmin Yang and Tae-Hyun Oh},
  journal={arXiv preprint arXiv:2403.14539},
  year={2024}
}